Visual Explanations of Speech Recognition Systems

Theoretical (Analytical):

Practical (Implementation):

Literature Work:

Overview

Speech recognition is reaching more and more areas of our daily lives, from in-car navigation and smartphone assistants to home assistants for everyday queries.

However, training and maintaining such models is often not trivial. Particularly when speech recognition is used in court, the model needs to explain its decisions so that developers can debug it and users can trust it.

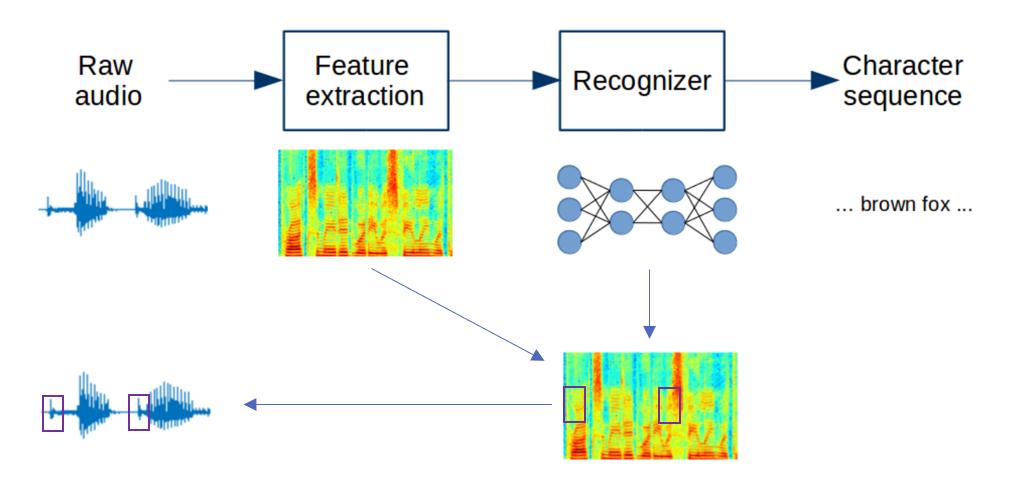

In this project, you will first create a small speech recognition model and then build a visual analytics system that facilitates the understanding of such complex models.

Problem Statement

- How can we visually explain the decisions of a speech recognition system?

- How can we visually communicate explanations of a deep learning speech recognition model to users and domain experts?

Tasks

- Design and implement an explanation system for speech recognition

- Implement different visualization techniques to explain models

- Address the issues that arise when transferring explanations to different user groups

- Identify relevant features a model uses for the prediction
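
As one possible starting point for identifying relevant features, the sketch below illustrates occlusion-based relevance on a spectrogram: patches of the time-frequency input are masked one at a time, and the drop in the model's score is recorded per patch. This is only a minimal illustration under stated assumptions, not a prescribed method for the project. The function `occlusion_relevance`, the patch sizes, and the stand-in scoring function `toy_score` are all hypothetical; in practice the score would come from the trained speech recognition model.

```python
from typing import Callable, List

# Rows are frequency bins, columns are time frames.
Spectrogram = List[List[float]]


def occlusion_relevance(
    spec: Spectrogram,
    score_fn: Callable[[Spectrogram], float],
    patch_freq: int = 2,
    patch_time: int = 2,
    fill: float = 0.0,
) -> Spectrogram:
    """Return a relevance map holding, for each cell, the score drop
    observed when the patch containing that cell is masked."""
    n_freq, n_time = len(spec), len(spec[0])
    base = score_fn(spec)
    relevance = [[0.0] * n_time for _ in range(n_freq)]

    for f0 in range(0, n_freq, patch_freq):
        for t0 in range(0, n_time, patch_time):
            f_range = range(f0, min(f0 + patch_freq, n_freq))
            t_range = range(t0, min(t0 + patch_time, n_time))

            # Copy the input and overwrite one patch with the fill value.
            masked = [row[:] for row in spec]
            for f in f_range:
                for t in t_range:
                    masked[f][t] = fill

            # A large drop means the model relied on this patch.
            drop = base - score_fn(masked)
            for f in f_range:
                for t in t_range:
                    relevance[f][t] = drop
    return relevance


def toy_score(spec: Spectrogram) -> float:
    """Hypothetical stand-in for a model's class score: it only looks at
    the energy in frequency bins 2-3 and time frames 4-5."""
    return sum(spec[f][t] for f in (2, 3) for t in (4, 5))


# Assumed toy input: 4 frequency bins x 8 time frames of constant energy.
spec = [[1.0] * 8 for _ in range(4)]
relevance = occlusion_relevance(spec, toy_score)

for row in relevance:
    print(row)
```

The resulting relevance map has the same shape as the spectrogram, so it could be rendered as a heatmap overlay in the visual analytics front end. Only the patch the toy score depends on receives a non-zero value here.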

Requirements

- High interest in the topic; deep learning knowledge is preferred

- Basic knowledge of information visualization and deep learning

- Good programming skills: HTML, JavaScript, D3.js, Python, PyTorch

Scope/Duration/Start

- Scope: Bachelor/Master

- 3-month project, 3/6-month thesis

- Start: immediately

Contact

References

Mishra, S., Sturm, B. L., & Dixon, S. (2017, October). Local interpretable model-agnostic explanations for music content analysis. In ISMIR (Vol. 53, pp. 537-543).